Albert has an interesting job. He takes the manufacturing software the plant operators use, and creates simulation software to train operators. I started working with him when his company selected some new software, and he needed suggestions on how to do things like make time stop.

He called recently wondering why the software we’d developed had quit working and now wouldn’t compile. We spent a half hour discussing this line, that line, adding lines, commenting out lines, and getting nowhere. Since my code compiled, I finally suggested he send me HIS copy of the code. The problem turned out to be a cross communication involving four lines of code (located in two different modules).

Somehow, for some reason, as we looked at the code, neither of us put the descriptions of what we saw together with the results Albert described. It wasn’t until I had his code that I found the difficulty. And why was that?

We were congruently communicating. We both wanted the same goal, but the longer we talked about the problem, the more frustrating it became. The Satir Interaction Model wasn’t helping. It turned out that the problem didn’t exist at the language level. It existed in the process of GETTING TO the language level.

Yet Another Model (YAM)

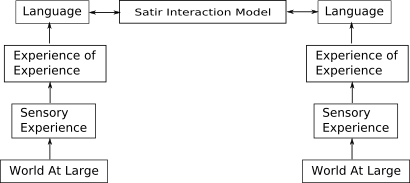

Here’s a model that shows one way of getting from the REAL WORLD, to the language level. I lifted this from Maps, Models, and the Structure of Reality.

Like all models, this model deletes, generalizes, and distorts information. I’ll probably blog more about this someday.

The real question we need to consider is: “Does this model provide a useful map of the territory?” To me it does. It shows (some of the) transforms we make, starting from the “World Out There” and finally ending in language. Just so you’re not surprised, each logical level involves its own model, and moving from one logical level to the next involves one or more processes.

When we add the bi-directional (simplified) Satir Interaction Model, we get a more complete understanding of the problem Albert and I experienced.

In our case, the World At Large differed. When moving from his World At Large, Albert deleted information (as we all do). Even though I tried, I couldn’t “language” down his modeling path to understand his World At Large. Once I could see his World At Large, finding the compile problem became much easier. Our worlds were finally the same.

So the next time you’re talking with someone, and you see the conversation isn’t working, remember:

Even though you’re talking the same language, how you got to the language shapes the meaning of the language.